Shadow Mapping with SlimDX and DirectX 11

Shadow mapping is a technique for casting shadows from arbitrary objects onto arbitrary 3D surfaces. You may recall that we implemented planar shadows earlier using the stencil buffer. Although that technique works well for rendering shadows onto planar (flat) surfaces, it does not extend to curved or irregular surfaces, which limits its usefulness considerably. Shadow mapping gets around this limitation by rendering the scene from the perspective of a light and saving the depth information into a texture called a shadow map. Then, when we render our scene to the backbuffer, the pixel shader computes the depth of the pixel being rendered relative to the light and compares it to the depth sampled from the corresponding location in the shadow map. If the computed depth is greater than the sampled depth, some other surface is closer to the light along that ray, so the pixel is not visible from the light and is in shadow; in that case we skip the diffuse and specular lighting for the pixel. Otherwise, we light the pixel as normal.

Using a simple point-sampling technique for shadow mapping results in very hard, aliased shadows, since each pixel is either fully in shadow or fully lit. Instead, we will use a technique known as percentage closer filtering (PCF), which performs the depth comparison at several neighboring shadow map texels and averages the results with a box filter to determine how shadowed the pixel is. This allows us to render partially shadowed pixels, which results in softer shadow edges.
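The core depth test can be sketched in a few lines of Python. This only illustrates the comparison, not the shader code we will write later: the shadow map here is a hypothetical 2D list of light-space depths in [0,1], `sample_point` and `is_lit` are made-up helper names, and texture coordinates are point sampled with no filtering, which is exactly what produces the hard edges that PCF is meant to soften.

```python
def sample_point(shadow_map, u, v):
    """Nearest-texel lookup for texture coordinates u, v in [0, 1]."""
    height = len(shadow_map)
    width = len(shadow_map[0])
    # Clamp so that u == 1.0 or v == 1.0 still maps to the last texel.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return shadow_map[y][x]


def is_lit(shadow_map, u, v, pixel_depth):
    """A pixel is shadowed only if its light-space depth is greater than the stored depth."""
    stored_depth = sample_point(shadow_map, u, v)
    return not (pixel_depth > stored_depth)
```

With the toy 2x2 map `[[1.0, 1.0], [0.3, 1.0]]`, a pixel at depth 0.5 that maps to the lower-left texel (stored depth 0.3) is shadowed, while one that maps to the lower-right texel (stored depth 1.0) is lit.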

This example is based on the example from Chapter 21 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. The full source for this example can be downloaded from my GitHub repository at https://github.com/ericrrichards/dx11.git, under the ShadowDemos project.

Rendering Scene Depth to a Texture

To generate the shadow map, we need to render the scene depth from the perspective of our light into the shadow map texture. To do this, we need to create the shadow map texture and a depth/stencil view of it that we can bind to our DeviceContext. We also need a ShaderResourceView of the same texture, so that we can feed the shadow map into our main shader, and a viewport that matches the dimensions of the shadow map texture, since the shadow map will rarely match the dimensions of our main backbuffer. To simplify this, we will create a ShadowMap class which encapsulates these views and the viewport, and provides a method to bind the shadow map texture as our DeviceContext’s depth target. The definition of the ShadowMap class looks like this:

    public class ShadowMap : DisposableClass {
        private bool _disposed;
        private readonly int _width;
        private readonly int _height;
        private DepthStencilView _depthMapDSV;
        private readonly Viewport _viewport;
        private ShaderResourceView _depthMapSRV;

        public ShaderResourceView DepthMapSRV {
            get { return _depthMapSRV; }
            private set { _depthMapSRV = value; }
        }
    }

To create a ShadowMap, we need to provide our Device and the width and height of the desired shadow map texture. We first generate a viewport that matches those dimensions. Next, we create the shadow map texture. We use the R24G8_Typeless format, since we will bind this texture both as the DeviceContext’s depth/stencil buffer and as a shader resource for our main shader; the depth/stencil view interprets the texture as a standard D24S8 depth buffer, while the shader resource view interprets the depth information using an R24X8 format (24 bits of normalized depth, with the 8 stencil bits ignored). Since we are using this texture for both purposes, we need to use the bind flags DepthStencil and ShaderResource. After creating the texture, we create the DepthStencilView and ShaderResourceView from it. Finally, we release our reference to the texture to avoid leaking memory; the views we created hold their own references, so the underlying texture stays alive as long as they do.

    public ShadowMap(Device device, int width, int height) {
        _width = width;
        _height = height;

        // The viewport covers the whole shadow map, with the full [0, 1] depth range.
        _viewport = new Viewport(0, 0, _width, _height, 0, 1.0f);

        // Typeless format, so the same memory can be viewed as a depth buffer
        // (D24_UNorm_S8_UInt) and as a shader resource (R24_UNorm_X8_Typeless).
        var texDesc = new Texture2DDescription {
            Width = _width,
            Height = _height,
            MipLevels = 1,
            ArraySize = 1,
            Format = Format.R24G8_Typeless,
            SampleDescription = new SampleDescription(1, 0),
            Usage = ResourceUsage.Default,
            BindFlags = BindFlags.DepthStencil | BindFlags.ShaderResource,
            CpuAccessFlags = CpuAccessFlags.None,
            OptionFlags = ResourceOptionFlags.None
        };
        var depthMap = new Texture2D(device, texDesc);

        var dsvDesc = new DepthStencilViewDescription {
            Flags = DepthStencilViewFlags.None,
            Format = Format.D24_UNorm_S8_UInt,
            Dimension = DepthStencilViewDimension.Texture2D,
            MipSlice = 0
        };
        _depthMapDSV = new DepthStencilView(device, depthMap, dsvDesc);

        var srvDesc = new ShaderResourceViewDescription {
            Format = Format.R24_UNorm_X8_Typeless,
            Dimension = ShaderResourceViewDimension.Texture2D,
            MipLevels = texDesc.MipLevels,
            MostDetailedMip = 0
        };
        DepthMapSRV = new ShaderResourceView(device, depthMap, srvDesc);

        // The views hold their own references to the texture, so release ours.
        Util.ReleaseCom(ref depthMap);
    }
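To make the two views of the same memory concrete, here is a small Python sketch of how one 32-bit texel of the R24G8 texture can be read either as D24S8 or as R24X8. It assumes the usual DXGI layout, in which the first component listed in the format name (the 24-bit depth) occupies the low bits of the word, and the standard UNORM conversion (integer divided by 2^24 - 1); the helper names are hypothetical.

```python
DEPTH_BITS = 24
DEPTH_MAX = (1 << DEPTH_BITS) - 1  # largest 24-bit integer, 16777215


def pack_d24s8(depth, stencil):
    """Encode a [0, 1] depth and an 8-bit stencil value into one 32-bit word."""
    depth_int = round(depth * DEPTH_MAX)
    return (stencil << DEPTH_BITS) | depth_int


def read_r24_unorm_x8(word):
    """What a shader sees through an R24_UNorm_X8_Typeless view: a float depth in [0, 1]."""
    return (word & DEPTH_MAX) / DEPTH_MAX


def read_stencil(word):
    """The upper 8 bits hold the stencil value, which the 'X8' part of the SRV ignores."""
    return word >> DEPTH_BITS
```

Packing a depth of 1.0 with stencil 0 gives `0x00FFFFFF`, which the shader resource view reads back as exactly 1.0; the stencil byte never affects the depth the shader sees.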

To use our ShadowMap class, we need a method that binds the ShadowMap depth/stencil buffer and viewport to our DeviceContext and clears the depth buffer. We bind a null render target, since we only care about the scene depth information. This also lets us take advantage of the fact that most GPUs are optimized for depth-only rendering; unless we need alpha clipping, such as for billboards, we can omit the pixel shader stage completely.

    public void BindDsvAndSetNullRenderTarget(DeviceContext dc) {
        dc.Rasterizer.SetViewports(_viewport);
        dc.OutputMerger.SetTargets(_depthMapDSV, (RenderTargetView)null);
        dc.ClearDepthStencilView(_depthMapDSV, DepthStencilClearFlags.Depth | DepthStencilClearFlags.Stencil, 1.0f, 0);
    }

Lastly, you may have noticed that ShadowMap subclasses our DisposableClass base type, so we need to override its Dispose method. We release the DepthStencilView and ShaderResourceView that we created in order to avoid leaking memory.

    protected override void Dispose(bool disposing) {
        if (!_disposed) {
            if (disposing) {
                Util.ReleaseCom(ref _depthMapSRV);
                Util.ReleaseCom(ref _depthMapDSV);
            }
            _disposed = true;
        }
        base.Dispose(disposing);
    }

Using the ShadowMap

Creating an instance of our ShadowMap class is very simple; we just call its constructor.

    // ShadowDemo class
    private const int SMapSize = 2048;
    private ShadowMap _sMap;

    public override bool Init() {
        // other initialization...
        _sMap = new ShadowMap(Device, SMapSize, SMapSize);
        return true;
    }

To draw the scene depth information from the viewpoint of our light, we need to create appropriate view and projection matrices. We will also need a matrix that converts a world-space position into the texture space of the shadow map: the light’s normalized device coordinates (NDC), with x and y remapped from [-1,+1] to the [0,1] texture address range. We’ll call this our shadow transform; concatenated with an object’s world matrix, it lets our main shaders find the shadow map texel that corresponds to a given point, and so determine whether a screen pixel is lit or in shadow.

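    private void BuildShadowTransform() {
        // Only the first directional light casts shadows; its direction is assumed to be normalized.
        var lightDir = _dirLights[0].Direction;
        var targetPos = _sceneBounds.Center;

        // Back the light away from the scene center by twice the scene radius along the
        // light direction (identical to -2 * radius * lightDir when the center is the origin).
        var lightPos = targetPos - 2.0f * _sceneBounds.Radius * lightDir;
        var up = new Vector3(0, 1, 0);

        var v = Matrix.LookAtLH(lightPos, targetPos, up);

        // Transform the bounding sphere center to light space.
        var sphereCenterLS = Vector3.TransformCoordinate(targetPos, v);

        // Orthographic view box in light space that encloses the scene.
        var l = sphereCenterLS.X - _sceneBounds.Radius;
        var b = sphereCenterLS.Y - _sceneBounds.Radius;
        var n = sphereCenterLS.Z - _sceneBounds.Radius;
        var r = sphereCenterLS.X + _sceneBounds.Radius;
        var t = sphereCenterLS.Y + _sceneBounds.Radius;
        var f = sphereCenterLS.Z + _sceneBounds.Radius;
        var p = Matrix.OrthoOffCenterLH(l, r, b, t, n, f);

        // Transform NDC x, y from [-1,+1] to texture space [0,1], flipping y.
        var T = new Matrix {
            M11 = 0.5f,
            M22 = -0.5f,
            M33 = 1.0f,
            M41 = 0.5f,
            M42 = 0.5f,
            M44 = 1.0f
        };

        var s = v * p * T;

        _lightView = v;
        _lightProj = p;
        _shadowTransform = s;
    }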

Since we are using directional lights, we use an orthographic projection matrix for our shadow transform. Remember, directional lights are assumed to be infinitely far away, so all light rays are parallel, and the shadow cast by an object (measured perpendicular to the light direction) is the same size as the object, no matter how far the object is from the light; the light’s view volume is simply a box (a rectangular prism). This matches the properties of an orthographic projection, in which the distance of an object from the viewpoint does not alter its projected size. If we wished to use spot lights to cast shadows, we would instead use a perspective projection, similar to the projection matrix we have been using for rendering; in that case, the volume lit by the spot light is a cone, which we would enclose in a perspective frustum.
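A toy comparison makes the difference concrete. The Python sketch below uses deliberately simplified, one-dimensional projections (the `view_width` and `focal_length` defaults are arbitrary assumptions, not values from the demo) and measures how wide an object of fixed size appears at two different distances.

```python
def ortho_width(object_width, depth, view_width=20.0):
    """Projected width under an orthographic projection; depth is deliberately ignored."""
    # An orthographic projection only scales (and offsets) x, so size does not depend on depth.
    return object_width * 2.0 / view_width


def perspective_width(object_width, depth, focal_length=1.0):
    """Projected width under a simple pinhole perspective projection."""
    # Perspective divides by view-space depth, so size falls off as 1 / depth.
    return object_width * focal_length / depth
```

Moving an object from depth 5 to depth 10 leaves its orthographic width unchanged but halves its perspective width, which is why an orthographic projection is the natural fit for parallel light rays.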

We construct the light’s view matrix in the same way we have built view matrices for our camera classes. We set the target of the transform to be the center of the scene. Although a directional light does not truly have a position, we place the light’s eye point backwards twice the radius of the scene along the light’s direction vector. This ensures that the entire scene will be in front of the light when we render into our shadow map. Note that we need to compute a bounding sphere for all of our scene geometry; in this case, we know how the scene is laid out, so we can construct this sphere easily in the constructor. If the scene’s layout is not known in advance, we would have to loop over our scene objects and construct a bounding sphere that contains all of them.
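Here is a minimal Python sketch of building the light’s view matrix from the scene’s bounding sphere. It assumes the row-vector LookAtLH layout documented for D3DX (which SlimDX follows), and it offsets the light from the sphere center rather than from the origin (the two coincide when the scene is centered at the origin); `light_view` and the vector helpers are hypothetical names. The fixed up vector (0, 1, 0) breaks down if the light points straight up or down, because the cross product then vanishes.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    length = math.sqrt(dot(a, a))
    return tuple(x / length for x in a)


def look_at_lh(eye, target, up):
    """Left-handed view matrix for row vectors, in the D3DX LookAtLH layout."""
    z = normalize(sub(target, eye))  # forward axis
    x = normalize(cross(up, z))      # right axis (degenerate if up is parallel to z)
    y = cross(z, x)                  # recomputed up axis
    return [
        [x[0], y[0], z[0], 0.0],
        [x[1], y[1], z[1], 0.0],
        [x[2], y[2], z[2], 0.0],
        [-dot(x, eye), -dot(y, eye), -dot(z, eye), 1.0],
    ]


def transform_coord(p, m):
    """Row vector [x y z 1] times m, then divide by w."""
    v = (p[0], p[1], p[2], 1.0)
    out = [sum(v[i] * m[i][j] for i in range(4)) for j in range(4)]
    return tuple(c / out[3] for c in out[:3])


def light_view(center, radius, light_dir, up=(0.0, 1.0, 0.0)):
    """View matrix for a directional light placed 2 * radius behind the scene center."""
    d = normalize(light_dir)
    eye = tuple(c - 2.0 * radius * di for c, di in zip(center, d))
    return look_at_lh(eye, center, up)
```

Whatever the light direction, the scene center ends up on the light’s view axis at z = 2R; for example, a center of (1, 2, 3), a radius of 5, and a light direction of (1, -1, 1) put the center at (0, 0, 10) in light space.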

To construct the orthographic projection matrix, we first transform the scene center point into the light’s view space. Next, we calculate the bounds of the view box in light space, which is simply the center point offset in each direction by the scene radius. Finally, we build the projection matrix from these bounds using the SlimDX Matrix.OrthoOffCenterLH() method.
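The following Python sketch fits the orthographic view box to the bounding sphere and builds the projection matrix. The matrix entries follow the row-vector formula documented for D3DX’s OrthoOffCenterLH, which SlimDX’s Matrix.OrthoOffCenterLH() is assumed to mirror; `sphere_ortho` and `project` are illustrative names.

```python
def ortho_off_center_lh(l, r, b, t, zn, zf):
    """Row-vector orthographic projection mapping the box to x, y in [-1, 1] and z in [0, 1]."""
    return [
        [2.0 / (r - l), 0.0, 0.0, 0.0],
        [0.0, 2.0 / (t - b), 0.0, 0.0],
        [0.0, 0.0, 1.0 / (zf - zn), 0.0],
        [(l + r) / (l - r), (t + b) / (b - t), zn / (zn - zf), 1.0],
    ]


def sphere_ortho(center_ls, radius):
    """Fit the light's view box around a bounding sphere given in light space."""
    cx, cy, cz = center_ls
    return ortho_off_center_lh(cx - radius, cx + radius,
                               cy - radius, cy + radius,
                               cz - radius, cz + radius)


def project(p, m):
    """Row vector [x y z 1] times m; w stays 1 for an orthographic projection."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(v[i] * m[i][j] for i in range(4)) for j in range(3))
```

The corners of the sphere’s light-space bounding box map to the corners of the NDC box, (-1, -1, 0) and (1, 1, 1), so nothing inside the sphere is clipped.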

The orthographic projection matrix transforms coordinates into NDC space; in Direct3D that means x and y in [-1,+1] and z in [0,1]. To use these coordinates for texture addressing, we need to scale and shift x and y into the texture address space, [0,1], [0,1], flipping y along the way, because NDC y points up while the texture v coordinate points down: u = 0.5x + 0.5 and v = -0.5y + 0.5. The z component is already in [0,1], the same range as the depths stored in the shadow map, so we leave it as is and later use it as the depth to compare against. This texture-space transform is our matrix T above.
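Applying T to a few NDC points shows the remapping, including the y flip. The matrix below copies the entries of T from BuildShadowTransform() in the same row-vector convention; the helper `ndc_to_texture` is only for illustration.

```python
# Entries copied from the matrix T in BuildShadowTransform() (row-vector convention).
T = [
    [0.5, 0.0, 0.0, 0.0],
    [0.0, -0.5, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.5, 0.5, 0.0, 1.0],
]


def ndc_to_texture(ndc):
    """Map an NDC point (x, y, z) to shadow-map texture space (u, v, depth)."""
    v = (ndc[0], ndc[1], ndc[2], 1.0)
    return tuple(sum(v[i] * T[i][j] for i in range(4)) for j in range(3))
```

The top-left NDC corner (-1, +1) becomes texture coordinate (0, 0), the bottom-right corner (+1, -1) becomes (1, 1), and the depth passes through unchanged.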

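Finally, to calculate the shadow transform, we concatenate the light’s view matrix, the orthographic projection matrix, and the texture-space matrix, in that order (SlimDX uses row vectors, so the leftmost matrix is applied first). We’ll also store the view and projection matrices separately, since we will use them to draw our scene into the shadow map.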
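As a sanity check on the concatenation, we can follow the scene’s bounding-sphere center through the shadow transform by hand. This worked derivation assumes a unit-length light direction and a light placed 2R behind the scene center (which is what BuildShadowTransform() computes when the scene is centered at the origin), so the center lands at (0, 0, 2R) in light space and the view box spans l = -R, r = R, b = -R, t = R, n = R, f = 3R:

$$
\begin{aligned}
\mathbf{s} &= \begin{bmatrix} \mathbf{p}_W & 1 \end{bmatrix} V\,P\,T \\
x_{ndc} &= \frac{2x_L}{r-l} + \frac{l+r}{l-r} = \frac{x_L}{R}, \qquad
y_{ndc} = \frac{2y_L}{t-b} + \frac{t+b}{b-t} = \frac{y_L}{R}, \qquad
z_{ndc} = \frac{z_L - n}{f - n} = \frac{z_L - R}{2R} \\
u &= \tfrac{1}{2}x_{ndc} + \tfrac{1}{2}, \qquad
v = -\tfrac{1}{2}y_{ndc} + \tfrac{1}{2}, \qquad
d = z_{ndc}
\end{aligned}
$$

For the center, (x_L, y_L, z_L) = (0, 0, 2R), so (u, v, d) = (1/2, 1/2, 1/2): the middle of the shadow map, halfway through its depth range. The points of the sphere nearest to and farthest from the light, (0, 0, R) and (0, 0, 3R), get d = 0 and d = 1.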

In our example, the direction of the shadow-casting light changes over time, to highlight how the shadows change. Thus, we will be running the BuildShadowTransform() function each frame, as part of our UpdateScene() method. If your directional lights do not change, you could perform these calculations once, at initialization time.

Drawing shadows

With our shadow map created, and our shadow transforms calculated, we can then draw our scene. This will consist of two stages:

- Draw the scene depth information to the shadow map, using the light’s view and projection matrices.

- Draw the scene normally, from the camera’s perspective, using the shadow map to determine which scene pixels are in shadow.

To draw the scene depth information, we will need to bind our ShadowMap depth/stencil to the DeviceContext, using the BindDsvAndSetNullRenderTarget() function, and then draw the scene using the DrawSceneToShadowMap() helper function, which we will discuss shortly.

Using our updated BasicModel class, drawing the scene normally is relatively simple. The new wrinkle is that we need to bind the ShadowMap shader texture to our effect, using a new setter function, SetShadowMap, and for each object, we need to set its shadow transform, using the SetShadowTransform setter. Each object’s shadow transform is simply its world-space transformation concatenated with the global shadow transform that we calculated in BuildShadowTransform.

    public override void DrawScene() {
        // Detach the shadow map from the effects, since we are about to render into it.
        Effects.BasicFX.SetShadowMap(null);
        Effects.NormalMapFX.SetShadowMap(null);

        // Pass 1: render scene depth from the light's point of view.
        _sMap.BindDsvAndSetNullRenderTarget(ImmediateContext);
        DrawSceneToShadowMap();
        ImmediateContext.Rasterizer.State = null;

        // Pass 2: restore the back buffer, depth buffer and viewport, and render normally.
        ImmediateContext.OutputMerger.SetTargets(DepthStencilView, RenderTargetView);
        ImmediateContext.Rasterizer.SetViewports(Viewport);
        ImmediateContext.ClearRenderTargetView(RenderTargetView, Color.Silver);
        ImmediateContext.ClearDepthStencilView(DepthStencilView, DepthStencilClearFlags.Depth | DepthStencilClearFlags.Stencil, 1.0f, 0);

        var viewProj = _camera.ViewProj;

        Effects.BasicFX.SetDirLights(_dirLights);
        Effects.BasicFX.SetEyePosW(_camera.Position);
        Effects.BasicFX.SetCubeMap(_sky.CubeMapSRV);
        Effects.BasicFX.SetShadowMap(_sMap.DepthMapSRV);

        Effects.NormalMapFX.SetDirLights(_dirLights);
        Effects.NormalMapFX.SetEyePosW(_camera.Position);
        Effects.NormalMapFX.SetCubeMap(_sky.CubeMapSRV);
        Effects.NormalMapFX.SetShadowMap(_sMap.DepthMapSRV);

        var activeTech = Effects.NormalMapFX.Light3TexTech;
        var activeSphereTech = Effects.BasicFX.Light3ReflectTech;
        var activeSkullTech = Effects.BasicFX.Light3ReflectTech;

        ImmediateContext.InputAssembler.InputLayout = InputLayouts.PosNormalTexTan;

        if (Util.IsKeyDown(Keys.W)) {
            ImmediateContext.Rasterizer.State = RenderStates.WireframeRS;
        }

        for (var p = 0; p < activeTech.Description.PassCount; p++) {
            var pass = activeTech.GetPassByIndex(p);

            // draw grid
            _grid.ShadowTransform = _shadowTransform;
            _grid.Draw(ImmediateContext, pass, viewProj);

            // draw box
            _box.ShadowTransform = _shadowTransform;
            _box.Draw(ImmediateContext, pass, viewProj);

            // draw columns
            foreach (var cylinder in _cylinders) {
                cylinder.ShadowTransform = _shadowTransform;
                cylinder.Draw(ImmediateContext, pass, viewProj);
            }
        }

        ImmediateContext.HullShader.Set(null);
        ImmediateContext.DomainShader.Set(null);
        ImmediateContext.InputAssembler.PrimitiveTopology = PrimitiveTopology.TriangleList;

        for (var p = 0; p < activeSphereTech.Description.PassCount; p++) {
            var pass = activeSphereTech.GetPassByIndex(p);
            foreach (var sphere in _spheres) {
                sphere.ShadowTransform = _shadowTransform;
                sphere.DrawBasic(ImmediateContext, pass, viewProj);
            }
        }

        int stride = Basic32.Stride;
        const int offset = 0;

        ImmediateContext.Rasterizer.State = null;

        ImmediateContext.InputAssembler.InputLayout = InputLayouts.Basic32;
        ImmediateContext.InputAssembler.SetVertexBuffers(0, new VertexBufferBinding(_skullVB, stride, offset));
        ImmediateContext.InputAssembler.SetIndexBuffer(_skullIB, Format.R32_UInt, 0);

        for (int p = 0; p < activeSkullTech.Description.PassCount; p++) {
            var world = _skullWorld;
            var wit = MathF.InverseTranspose(world);
            var wvp = world * viewProj;

            Effects.BasicFX.SetWorld(world);
            Effects.BasicFX.SetWorldInvTranspose(wit);
            Effects.BasicFX.SetWorldViewProj(wvp);
            // The skull is drawn from raw buffers, so its shadow transform is set by hand.
            Effects.BasicFX.SetShadowTransform(world * _shadowTransform);
            Effects.BasicFX.SetMaterial(_skullMat);

            activeSkullTech.GetPassByIndex(p).Apply(ImmediateContext);
            ImmediateContext.DrawIndexed(_skullIndexCount, 0, 0);
        }

        // Debug quad showing the shadow map, then the sky.
        DrawScreenQuad();
        _sky.Draw(ImmediateContext, _camera);

        // Restore default states.
        ImmediateContext.Rasterizer.State = null;
        ImmediateContext.OutputMerger.DepthStencilState = null;
        ImmediateContext.OutputMerger.DepthStencilReference = 0;

        SwapChain.Present(0, PresentFlags.None);
    }

Drawing Scene Depth

Drawing the scene depth information is very similar to rendering the scene normally. Instead of using the camera view and projection matrices, we use the light matrices computed by BuildShadowTransform(). We also use a new BasicModelInstance method, DrawShadow(), which is similar to our Draw() method, except it omits any material and texturing information and uses a special shadow mapping effect shader, rather than our normal mapping shader.

    private void DrawSceneToShadowMap() {
        try {
            // Use the light's view and projection instead of the camera's.
            var view = _lightView;
            var proj = _lightProj;
            var viewProj = view * proj;

            Effects.BuildShadowMapFX.SetEyePosW(_camera.Position);
            Effects.BuildShadowMapFX.SetViewProj(viewProj);

            ImmediateContext.InputAssembler.PrimitiveTopology = PrimitiveTopology.TriangleList;

            var smapTech = Effects.BuildShadowMapFX.BuildShadowMapTech;

            const int Offset = 0;

            ImmediateContext.InputAssembler.InputLayout = InputLayouts.PosNormalTexTan;

            // Note: BuildShadowMapTech sets its own rasterizer state (Depth) when its
            // pass is applied, which replaces this wireframe state.
            if (Util.IsKeyDown(Keys.W)) {
                ImmediateContext.Rasterizer.State = RenderStates.WireframeRS;
            }

            for (int p = 0; p < smapTech.Description.PassCount; p++) {
                var pass = smapTech.GetPassByIndex(p);
                _grid.DrawShadow(ImmediateContext, pass, viewProj);
                _box.DrawShadow(ImmediateContext, pass, viewProj);
                foreach (var cylinder in _cylinders) {
                    cylinder.DrawShadow(ImmediateContext, pass, viewProj);
                }
            }

            for (var p = 0; p < smapTech.Description.PassCount; p++) {
                var pass = smapTech.GetPassByIndex(p);
                foreach (var sphere in _spheres) {
                    sphere.DrawShadow(ImmediateContext, pass, viewProj);
                }
            }

            // The skull uses its own vertex/index buffers and the Basic32 input layout.
            int stride = Basic32.Stride;
            ImmediateContext.Rasterizer.State = null;
            ImmediateContext.InputAssembler.InputLayout = InputLayouts.Basic32;
            ImmediateContext.InputAssembler.SetVertexBuffers(0, new VertexBufferBinding(_skullVB, stride, Offset));
            ImmediateContext.InputAssembler.SetIndexBuffer(_skullIB, Format.R32_UInt, 0);

            for (var p = 0; p < smapTech.Description.PassCount; p++) {
                var world = _skullWorld;
                var wit = MathF.InverseTranspose(world);
                var wvp = world * viewProj;

                Effects.BuildShadowMapFX.SetWorld(world);
                Effects.BuildShadowMapFX.SetWorldInvTranspose(wit);
                Effects.BuildShadowMapFX.SetWorldViewProj(wvp);
                Effects.BuildShadowMapFX.SetTexTransform(Matrix.Scaling(1, 2, 1));

                smapTech.GetPassByIndex(p).Apply(ImmediateContext);
                ImmediateContext.DrawIndexed(_skullIndexCount, 0, 0);
            }
        } catch (Exception ex) {
            Console.WriteLine(ex.Message);
        }
    }

    // BasicModelInstance class
    public void DrawShadow(DeviceContext dc, EffectPass effectPass, Matrix viewProj) {
        var world = World;
        var wit = MathF.InverseTranspose(world);
        var wvp = world * viewProj;

        Effects.BuildShadowMapFX.SetWorld(world);
        Effects.BuildShadowMapFX.SetWorldInvTranspose(wit);
        Effects.BuildShadowMapFX.SetWorldViewProj(wvp);

        for (int i = 0; i < Model.SubsetCount; i++) {
            effectPass.Apply(dc);
            Model.ModelMesh.Draw(dc, i);
        }
    }

Shader Code

We will need to create a new shader for rendering the shadow map depth information, as well as modify our existing Basic and NormalMap shaders to support shadow maps. Our shader for rendering the scene depth is relatively simple; for the purposes of illustration, I’m going to assume we are not using displacement mapping, which makes the shader code much simpler. Our BuildShadowMap.fx shader looks like this:

    cbuffer cbPerFrame
    {
        float3 gEyePosW;
    };

    cbuffer cbPerObject
    {
        float4x4 gWorld;
        float4x4 gWorldInvTranspose;
        float4x4 gViewProj;
        float4x4 gWorldViewProj;
        float4x4 gTexTransform;
    };

    Texture2D gDiffuseMap;

    SamplerState samLinear
    {
        Filter = MIN_MAG_MIP_LINEAR;
        AddressU = Wrap;
        AddressV = Wrap;
    };

    struct VertexIn
    {
        float3 PosL    : POSITION;
        float3 NormalL : NORMAL;
        float2 Tex     : TEXCOORD;
    };

    struct VertexOut
    {
        float4 PosH : SV_POSITION;
        float2 Tex  : TEXCOORD;
    };

    VertexOut VS(VertexIn vin)
    {
        VertexOut vout;

        // Transform to the light's homogeneous clip space.
        vout.PosH = mul(float4(vin.PosL, 1.0f), gWorldViewProj);

        // Texture coordinates are only needed by the alpha-clip pixel shader.
        vout.Tex = mul(float4(vin.Tex, 0.0f, 1.0f), gTexTransform).xy;

        return vout;
    }

    // Only used by BuildShadowMapAlphaClipTech.
    void PS(VertexOut pin)
    {
        float4 diffuse = gDiffuseMap.Sample(samLinear, pin.Tex);

        // Don't write transparent pixels to the shadow map.
        clip(diffuse.a - 0.15f);
    }

    RasterizerState Depth
    {
        // For a UNORM depth buffer the applied bias is
        //   Bias = DepthBias * r + SlopeScaledDepthBias * MaxDepthSlope
        // where r is the smallest representable depth step (about 1/2^24 for 24 bits),
        // so DepthBias = 10000 adds roughly 0.0006. A clamp of 0 means no clamping.
        DepthBias = 10000;
        DepthBiasClamp = 0.0f;
        SlopeScaledDepthBias = 1.0f;
    };

    technique11 BuildShadowMapTech
    {
        pass P0
        {
            SetVertexShader( CompileShader( vs_4_0, VS() ) );
            SetGeometryShader( NULL );
            SetPixelShader( NULL );
            SetRasterizerState(Depth);
        }
    }

    technique11 BuildShadowMapAlphaClipTech
    {
        pass P0
        {
            SetVertexShader( CompileShader( vs_4_0, VS() ) );
            SetGeometryShader( NULL );
            SetPixelShader( CompileShader( ps_4_0, PS() ) );
            // Alpha-clipped casters need the same depth bias to avoid shadow acne.
            SetRasterizerState(Depth);
        }
    }

The vertex shader used here is dead simple: since we only care about depth, the only essential operation is to transform the input vertices by the light’s combined world-view-projection matrix (it also passes the texture coordinates through, but only the alpha-clip pixel shader uses them). For most of our objects, we will use the non-alpha-clip technique, so the pixel shader will not even be run; we would use the alpha-clip technique for objects like our tree billboards, or the wire box from the blend demo.

The RasterizerState block declared here needs some further explanation, however. Because our shadow map, in most cases, is not rendered from the same perspective that the camera views the scene from, and because the resolution of the shadow map is finite, there is rarely a one-to-one correspondence between shadow map texels and screen pixels; instead, a single shadow map texel covers some area of the scene. This can cause an aliasing artifact, often called shadow acne, in which some pixels that should be lit appear shadowed, because a surface ends up being compared against a slightly nearer depth recorded for the surface itself. To avoid this artifact, we apply a small bias to the depth values generated when we create the shadow map. This is somewhat fiddly; too little bias results in shadow acne, while too much results in what is called “peter panning,” i.e. the shadow becomes detached from the base of the object which casts it. Additionally, the appropriate bias depends on the slope of the surface we are rendering: faces that are steeply sloped relative to the light need larger bias values than faces that squarely face it. Fortunately, with SlopeScaledDepthBias, the graphics hardware scales the bias by each polygon’s depth slope for us. For further details, see the comments in the source code, and read through the explanation of depth bias on MSDN.
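The bias rule can be written down explicitly. For a UNORM depth buffer, the Direct3D documentation gives the bias as DepthBias × r + SlopeScaledDepthBias × MaxDepthSlope, where r is the smallest representable positive depth value and MaxDepthSlope is the larger of the polygon’s horizontal and vertical depth slopes, optionally clamped by DepthBiasClamp. The Python sketch below just evaluates that formula; the real rasterizer computes MaxDepthSlope per polygon, so here it is passed in as an assumed parameter, and `effective_depth_bias` is a hypothetical name.

```python
def effective_depth_bias(depth_bias, slope_scaled_depth_bias, max_depth_slope,
                         depth_bias_clamp=0.0, depth_bits=24):
    """Depth bias added to each depth value for a UNORM depth buffer."""
    # r: smallest positive value representable in an n-bit UNORM depth format.
    r = 1.0 / ((1 << depth_bits) - 1)
    bias = depth_bias * r + slope_scaled_depth_bias * max_depth_slope
    # A clamp of 0 disables clamping; a positive clamp caps the bias from above,
    # a negative clamp bounds it from below.
    if depth_bias_clamp > 0.0:
        bias = min(depth_bias_clamp, bias)
    elif depth_bias_clamp < 0.0:
        bias = max(depth_bias_clamp, bias)
    return bias
```

With the values from BuildShadowMap.fx (DepthBias = 10000, SlopeScaledDepthBias = 1.0), a polygon that squarely faces the light (slope 0) is pushed back by only about 0.0006, while a polygon whose depth changes by 0.5 per pixel gets an extra 0.5, so the slope term dominates for steep faces.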

Calculating the Shadow Factor in our NormalMap.fx Shader

In our normal rendering shader effect, we need to add some code to our vertex shader to compute the projected shadow map texture coordinates for each vertex, using the transformation matrix that we constructed in the BuildShadowTransform() function.

    struct VertexOut
    {
        float4 PosH       : SV_POSITION;
        float3 PosW       : POSITION;
        float3 NormalW    : NORMAL;
        float3 TangentW   : TANGENT;
        float2 Tex        : TEXCOORD0;
        float4 ShadowPosH : TEXCOORD1;
    };

    VertexOut VS(VertexIn vin)
    {
        VertexOut vout;

        // Transform to world space.
        vout.PosW     = mul(float4(vin.PosL, 1.0f), gWorld).xyz;
        vout.NormalW  = mul(vin.NormalL, (float3x3)gWorldInvTranspose);
        vout.TangentW = mul(vin.TangentL, (float3x3)gWorld);

        // Transform to homogeneous clip space.
        vout.PosH = mul(float4(vin.PosL, 1.0f), gWorldViewProj);

        // Output vertex attributes for interpolation across triangle.
        vout.Tex = mul(float4(vin.Tex, 0.0f, 1.0f), gTexTransform).xy;

        // Generate projective tex-coords to project shadow map onto scene.
        // gShadowTransform is the object's world matrix times the shadow transform,
        // so it takes object-space positions.
        vout.ShadowPosH = mul(float4(vin.PosL, 1.0f), gShadowTransform);

        return vout;
    }

Then, in our pixel shader, we need to determine whether the pixel being processed is in shadow, and adjust the colors returned by our lighting equations appropriately. Here, a shadow factor of 1.0 means the pixel is fully lit, and 0 means that it is fully in shadow.

    // PS()
    // normal mapping code omitted
    float4 litColor = texColor;
    if( gLightCount > 0 )
    {
        // Start with a sum of zero.
        float4 ambient = float4(0.0f, 0.0f, 0.0f, 0.0f);
        float4 diffuse = float4(0.0f, 0.0f, 0.0f, 0.0f);
        float4 spec    = float4(0.0f, 0.0f, 0.0f, 0.0f);

        // Only the first light casts a shadow.
        float3 shadow = float3(1.0f, 1.0f, 1.0f);
        shadow[0] = CalcShadowFactor(samShadow, gShadowMap, pin.ShadowPosH);

        // Sum the light contribution from each light source.
        [unroll]
        for(int i = 0; i < gLightCount; ++i)
        {
            float4 A, D, S;
            ComputeDirectionalLight(gMaterial, gDirLights[i], bumpedNormalW, toEye, A, D, S);

            ambient += A;   // ambient light is not shadowed
            diffuse += shadow[i]*D;
            spec    += shadow[i]*S;
        }
        // ... (remainder of PS omitted)

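As we mentioned, we will use a filtered value when determining the shadow factor for a pixel, to soften the edges of our shadows. In this case, we use a 3x3 box filter: we sample at the pixel’s shadow map location as well as at the 8 surrounding shadow map texels, and average the results. The SampleCmpLevelZero HLSL function samples the texture’s first (most detailed) mipmap level and compares the sampled value to a reference value (the pixel’s depth, in this case). With a point comparison filter, each comparison returns either 0, if it fails, or 1, if it passes; with the COMPARISON_MIN_MAG_LINEAR_MIP_POINT filter used below, the hardware performs the comparison on each of the four texels in the bilinear footprint and then bilinearly filters those 0/1 results, so each tap can return a fractional value.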

    SamplerComparisonState samShadow
    {
        Filter   = COMPARISON_MIN_MAG_LINEAR_MIP_POINT;
        AddressU = BORDER;
        AddressV = BORDER;
        AddressW = BORDER;
        BorderColor = float4(0.0f, 0.0f, 0.0f, 0.0f);

        ComparisonFunc = LESS;
    };

    // Must match the shadow map size used on the C# side (SMapSize).
    static const float SMAP_SIZE = 2048.0f;
    static const float SMAP_DX = 1.0f / SMAP_SIZE;

    float CalcShadowFactor(SamplerComparisonState samShadow,
                           Texture2D shadowMap,
                           float4 shadowPosH)
    {
        // Complete projection by doing division by w.
        shadowPosH.xyz /= shadowPosH.w;

        // Depth in NDC space.
        float depth = shadowPosH.z;

        // Texel size.
        const float dx = SMAP_DX;

        float percentLit = 0.0f;
        const float2 offsets[9] =
        {
            float2(-dx, -dx),  float2(0.0f, -dx),  float2(dx, -dx),
            float2(-dx, 0.0f), float2(0.0f, 0.0f), float2(dx, 0.0f),
            float2(-dx, +dx),  float2(0.0f, +dx),  float2(dx, +dx)
        };

        // 3x3 box filter: each tap is a hardware-filtered depth comparison.
        [unroll]
        for(int i = 0; i < 9; ++i)
        {
            percentLit += shadowMap.SampleCmpLevelZero(samShadow,
                shadowPosH.xy + offsets[i], depth).r;
        }

        return percentLit / 9.0f;
    }

For directional lights, as we are using in this example, the first step, the divide by w, is not strictly necessary, because an orthographic projection leaves w = 1; it is included so that we can use the same shader for both directional lights, which use an orthographic projection, and spot lights, which use a perspective projection.
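To see what the filter computes, here is a simplified Python model of CalcShadowFactor(). It makes one deliberate simplification: each of the nine taps is a single point-sampled comparison, whereas the COMPARISON_MIN_MAG_LINEAR_MIP_POINT sampler additionally blends the comparison results of four neighboring texels per tap. The tiny 4x4 map and the function names are illustrative assumptions; out-of-range taps read a depth of 0 to mimic BORDER addressing with a zero border color, and a tap passes (is lit) when the pixel depth is less than the stored depth, as with ComparisonFunc = LESS.

```python
SMAP_SIZE = 4              # tiny illustrative map; the demo's shadow map is 2048 x 2048
SMAP_DX = 1.0 / SMAP_SIZE  # size of one texel in texture coordinates


def compare_point(shadow_map, u, v, depth):
    """One point-sampled depth comparison: 1.0 if lit, 0.0 if shadowed."""
    if 0.0 <= u < 1.0 and 0.0 <= v < 1.0:
        stored = shadow_map[int(v * SMAP_SIZE)][int(u * SMAP_SIZE)]
    else:
        stored = 0.0  # mimics BORDER addressing with a border depth of 0
    return 1.0 if depth < stored else 0.0  # LESS: passes (lit) when depth < stored


def calc_shadow_factor(shadow_map, shadow_pos_h):
    """3x3 percentage closer filtering on a homogeneous shadow-map position."""
    x, y, z, w = shadow_pos_h
    u, v, depth = x / w, y / w, z / w  # complete the projection (w is 1 for orthographic)
    percent_lit = 0.0
    for oy in (-SMAP_DX, 0.0, SMAP_DX):
        for ox in (-SMAP_DX, 0.0, SMAP_DX):
            percent_lit += compare_point(shadow_map, u + ox, v + oy, depth)
    return percent_lit / 9.0
```

For a pixel sitting next to the edge of an occluder, three of the nine taps fail and the pixel comes out 6/9 lit, rather than snapping to fully lit or fully shadowed.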

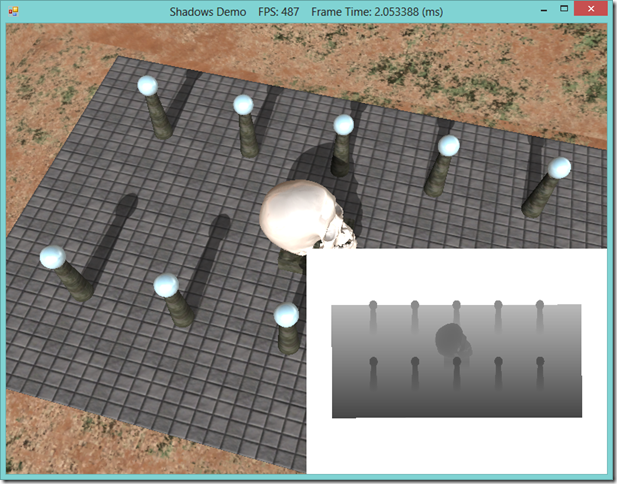

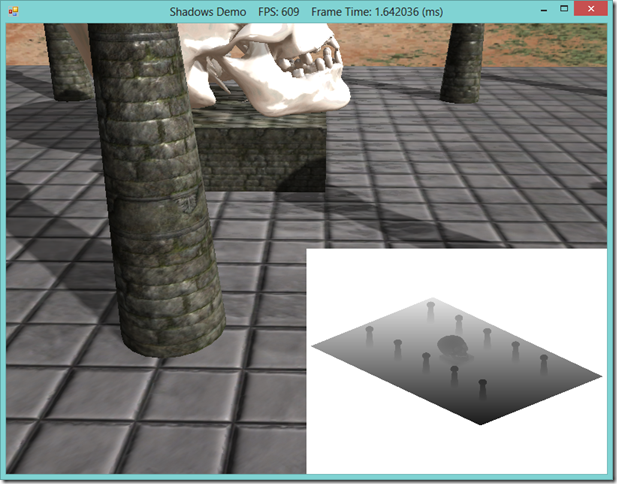

Results

In the screenshots shown, we are also drawing the shadow map depth texture to a quad in the lower right-hand corner of the window, just to visualize what the depth buffer looks like and check our results. I’m not going to go into how this quad is rendered, since it doesn’t really have anything to do with shadow mapping. At some point in the near future, I will discuss the technique, when I get around to implementing a terrain minimap.

One other thing to note is that if you were to use the displacement mapping shader along with shadow mapping, you would need to alter the BuildShadowMap.fx shader to perform the same tessellation and displacement mapping as when rendering your objects to the back buffer; otherwise, the shadow map would not match the displaced geometry. To keep things simple, I have skipped over the additional shaders and techniques defined in the effect for displacement mapping.

Next Time…

We’ll be moving on to the final chapter of Mr. Luna’s book, on screen-space ambient occlusion. Thus far, we have been using a simple ambient term in our lighting equations to simulate the indirect light that bounces off other objects in the scene. This ambient lighting works OK, but it is simplistic: it is the same everywhere and produces a flat color. More realistic lighting models that account for light rays bouncing off objects, such as ray tracing and path tracing, are still too slow for real-time rendering, despite the speedups in hardware these days. Screen-space ambient occlusion is not physically accurate, but it produces fairly good results with good performance, and it is becoming a fairly standard technique in recent AAA games.