Geometry Shader Billboards with SlimDX and DirectX 11

When I first learned to program DirectX with shaders, DirectX 9 was the newest thing around. Back then, there were only two programmable stages in the shader pipeline: the vertex and pixel shaders that we have been using so far. DirectX 10 introduced the geometry shader, which lets us operate on entire primitives on the GPU after they have gone through the vertex shader, and even emit new ones.

One application of this capability is rendering billboards. Billboarding is a common technique for rendering far-off objects or minor scene details: instead of drawing a full 3D object, we draw a texture onto a quad that is oriented towards the viewer. This is much cheaper, and for distant objects and minor details it provides a good-enough approximation. For example, many games use billboards to render grass and other foliage, and the Total War series renders far-away units as billboard sprites (in Medieval II: Total War, you can see this by zooming in and out on a unit; at a certain distance you’ll see the unit “pop”, which is the point where the engine switches between sprite billboards and the full 3D model). The older way of rendering billboards required maintaining a dynamic vertex buffer of quads, and re-orienting their vertices towards the viewer on the CPU whenever the camera moved. Dynamic vertex buffers carry a lot of overhead, because the geometry has to be re-uploaded to the GPU every time it changes, and each billboard costs four vertices. Using the geometry shader, we can instead use a static vertex buffer of 3D points, with only a single vertex per billboard, and expand each point into a camera-aligned quad on the GPU.

We’ll illustrate this technique by porting the TreeBillboard example from Chapter 11 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. This demo builds upon our previous Alpha-blending example, adding some tree billboards to the scene. You can download the full code for this example from my GitHub repository, at https://github.com/ericrrichards/dx11.git under the TreeBillboardDemo project.

TreePointSprite Vertex Type

We will create a new vertex type for our tree billboards. Since we are using the geometry shader to expand each point into a quad, we need only store the center point of the billboard and the width and height of the quad. Note that the SIZE semantic we use for the billboard size is not one that has any predefined meaning in HLSL (http://msdn.microsoft.com/en-us/library/windows/desktop/bb509647(v=vs.85).aspx). Unlike the system-value semantics (those prefixed with SV_), a user-defined semantic like this simply serves to match an element of the input layout, and thus a field of our C# struct, to the corresponding field of the HLSL vertex input struct.

HLSL struct:

    struct VertexIn
    {
        float3 PosW  : POSITION;
        float2 SizeW : SIZE;
    };

C# struct:

    using System.Runtime.InteropServices;
    using SlimDX;
    using SlimDX.Direct3D11;
    using SlimDX.DXGI;

    [StructLayout(LayoutKind.Sequential)]
    public struct TreePointSprite
    {
        public Vector3 Pos;   // billboard center, world space
        public Vector2 Size;  // billboard width and height

        public static readonly int Stride = Marshal.SizeOf(typeof(TreePointSprite));
    }

    public static class InputLayoutDescriptions
    {
        // other input layouts omitted...
        public static readonly InputElement[] TreePointSprite =
        {
            new InputElement("POSITION", 0, Format.R32G32B32_Float, 0, 0, InputClassification.PerVertexData, 0),
            new InputElement("SIZE", 0, Format.R32G32_Float, 12, 0, InputClassification.PerVertexData, 0)
        };
    }
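The byte offsets in the input layout have to agree with the struct layout. As a quick check, here is a minimal sketch assuming a hypothetical stand-in struct, TreePointSpriteLayout, that spells out the Vector3/Vector2 fields as plain floats so it runs without SlimDX. Marshal.SizeOf and Marshal.OffsetOf report the stride and the offset of the size field, which is where the SIZE element’s offset of 12 comes from.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical stand-in for TreePointSprite: same memory layout as
// Vector3 Pos + Vector2 Size, but with plain floats so no SlimDX is needed.
[StructLayout(LayoutKind.Sequential)]
public struct TreePointSpriteLayout
{
    public float PosX, PosY, PosZ; // POSITION: R32G32B32_Float, 12 bytes
    public float SizeX, SizeY;     // SIZE: R32G32_Float, 8 bytes
}

public static class LayoutCheck
{
    // Size in bytes of one vertex, i.e. the stride given to the input assembler.
    public static int Stride => Marshal.SizeOf(typeof(TreePointSpriteLayout));

    // Byte offset of the SIZE element within one vertex.
    public static int SizeOffset =>
        Marshal.OffsetOf(typeof(TreePointSpriteLayout), "SizeX").ToInt32();
}
```

Here LayoutCheck.Stride evaluates to 20 and LayoutCheck.SizeOffset to 12, matching the 12 passed to the SIZE InputElement.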

TreeSprite.fx

We will need to create a new effect file to render our tree billboards. We’ll start by looking at the parameters we will need to set for our constant buffers.

    cbuffer cbPerFrame
    {
        DirectionalLight gDirLights[3];
        float3 gEyePosW;

        float  gFogStart;
        float  gFogRange;
        float4 gFogColor;
    };

    cbuffer cbPerObject
    {
        float4x4 gViewProj;
        Material gMaterial;
    };

    cbuffer cbFixed
    {
        //
        // Compute texture coordinates to stretch texture over quad.
        //
        float2 gTexC[4] =
        {
            float2(0.0f, 1.0f),
            float2(0.0f, 0.0f),
            float2(1.0f, 1.0f),
            float2(1.0f, 0.0f)
        };
    };

    // Nonnumeric values cannot be added to a cbuffer.
    Texture2DArray gTreeMapArray;

    SamplerState samLinear
    {
        Filter = MIN_MAG_MIP_LINEAR;
        AddressU = CLAMP;
        AddressV = CLAMP;
    };

Our per-frame parameters should look very familiar, as they are the same ones we have been using in Basic.fx. Likewise, our cbPerObject parameters should be self-explanatory, although we can dispense with the world transforms that we use in Basic.fx: our point sprites are defined in world space, and the geometry shader will create the quad vertices in world space as well.

The cbFixed constant buffer contains a constant float2 array holding the standard texture coordinates that map our billboard texture onto the generated quad. Our gTreeMapArray uses a type that is new to us, Texture2DArray, which is an array of textures (all sharing the same dimensions, format and mip count) that we can index with a variable. If we were to simply define an array of Texture2D, we would not be able to use a variable index into it in our pixel shader, as the fxc compiler complains about any non-literal index when sampling a texture. Setting this parameter is a little more complicated than setting a normal texture, but we will get to that when we cover the wrapper class for this effect. Last, we define a linear filtering sampler state, which clamps texture coordinates to the [0,1] range in both the u and v directions. Strictly speaking, this isn’t necessary: we provide no way to change the texture coordinates in our gTexC array, so they will always be in the [0,1] range. However, if you were to change the coordinates so that they extend outside that range, clamping keeps you from ending up with oddly tiled tree billboards.
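The difference between clamping and tiling can be sketched on a single texture coordinate. This is a simplified model, assuming a hypothetical AddressModes helper; it only shows where a coordinate lands and ignores the filtering the sampler applies afterwards.

```csharp
using System;

// Simplified models of two sampler address modes, applied to one coordinate.
public static class AddressModes
{
    // CLAMP: coordinates outside [0,1] are pinned to the edge of the texture.
    public static float Clamp(float u) => Math.Min(Math.Max(u, 0.0f), 1.0f);

    // WRAP: only the fractional part is used, so the texture repeats (tiles).
    public static float Wrap(float u) => u - (float)Math.Floor(u);
}
```

With u running from 0 to 2 across the quad, Wrap would draw the tree twice side by side, while Clamp would smear the texture’s edge column across the right half instead of repeating the tree.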

Our vertex shader is just a simple pass-through, as our point vertices are already defined in world-space, and we will do the view-projection transform in the geometry shader when we expand the point into a quad.

    VertexOut VS(VertexIn vin)
    {
        VertexOut vout;

        // Just pass data over to geometry shader.
        vout.CenterW = vin.PosW;
        vout.SizeW   = vin.SizeW;

        return vout;
    }

Next up comes our geometry shader. First, we declare the attribute [maxvertexcount(n)], which tells the GPU the maximum number of vertices a single invocation of the geometry shader will output, so that it can allocate the necessary memory. We can output fewer vertices than this maximum, but not more; if we do, the GPU will ignore the excess vertices. Our geometry shader takes three parameters:

- point VertexOut gin[1] – This is the vertex which is output by our vertex shader. We declare this variable as type point, which indicates that the primitive is a point. Other options are line, triangle, lineadj, and triangleadj. The geometry shader only considers complete primitives, so, for instance, if we wanted to input a line primitive to our geometry shader, the declaration would be line VertexOut gin[2], or for a triangle, triangle VertexOut gin[3].

- uint primID : SV_PrimitiveID – This parameter is a unique identifier generated automatically for each primitive in a draw call, starting from 0. The IDs are only unique within a single draw call; the sequence restarts at 0 for each call.

- inout TriangleStream<GeoOut> triStream – This parameter is the output of our geometry shader. Since the geometry shader can output a variable number of vertices (up to maxvertexcount), the output is a stream, which we can think of as a List<T>. We can declare a PointStream, LineStream, or TriangleStream, depending on whether we want to output points, lines, or triangles, and we add vertices to the stream with its Append() method. For lines and triangles, the stream is interpreted as a strip; for example, with a LineStream, three appended points would be rendered as a line running from p1 to p2 to p3. We can, however, simulate line or triangle lists by calling the stream’s RestartStrip() method. To draw the same two segments as a line list, we would Append p1 and p2, call RestartStrip(), then Append p2 and p3. Note also that if we output fewer vertices than the output primitive requires (2 vertices for a line, 3 for a triangle), the GPU discards the incomplete primitive.
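The strip rules described in the list above can be modeled on the CPU. The following is a simplified sketch with a hypothetical StripAssembly class, not a Direct3D API: each inner array is the sequence of vertices appended between RestartStrip() calls, and the method returns the triangles the rasterizer would receive.

```csharp
using System;
using System.Collections.Generic;

// Simplified CPU model of how a TriangleStream is assembled into triangles.
public static class StripAssembly
{
    // strips: vertex indices of each strip, split wherever RestartStrip() was called.
    public static List<int[]> ToTriangles(IEnumerable<int[]> strips)
    {
        var triangles = new List<int[]>();
        foreach (var strip in strips)
        {
            // A strip of n >= 3 vertices yields n - 2 triangles; a strip with
            // fewer than 3 vertices is incomplete and produces nothing.
            for (int i = 0; i + 2 < strip.Length; i++)
            {
                // Odd triangles swap their first two vertices; otherwise the strip
                // order would alternate between clockwise and counter-clockwise.
                triangles.Add(i % 2 == 0
                    ? new[] { strip[i], strip[i + 1], strip[i + 2] }
                    : new[] { strip[i + 1], strip[i], strip[i + 2] });
            }
        }
        return triangles;
    }
}
```

Feeding it the four quad vertices 0, 1, 2, 3 as one strip yields two triangles, (0, 1, 2) and (2, 1, 3), which is why four Append calls are enough for one billboard.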

In our geometry shader, we construct a frame of reference centered on our point, with its look vector pointing towards the viewer (projected onto the xz-plane, so that the billboard stays upright). Then we halve the SIZE float2 to get the half-width and half-height, which let us compute the corner vertices of the quad from the center position. Lastly, we create the four vertices of the quad, assigning each its world position, homogeneous clip-space position, normal (pointing towards the viewer) and texture coordinates (from our constant gTexC array), and append each vertex to the output stream. We also attach the primitive ID to each vertex, since we will need it in the pixel shader.

    // We expand each point into a quad (4 vertices), so the maximum number of vertices
    // we output per geometry shader invocation is 4.
    [maxvertexcount(4)]
    void GS(point VertexOut gin[1],
            uint primID : SV_PrimitiveID,
            inout TriangleStream<GeoOut> triStream)
    {
        //
        // Compute the local coordinate system of the sprite relative to the world
        // space such that the billboard is aligned with the y-axis and faces the eye.
        //
        float3 up = float3(0.0f, 1.0f, 0.0f);
        float3 look = gEyePosW - gin[0].CenterW;
        look.y = 0.0f; // y-axis aligned, so project to xz-plane
        look = normalize(look);
        float3 right = cross(up, look);

        //
        // Compute triangle strip vertices (quad) in world space.
        //
        float halfWidth  = 0.5f*gin[0].SizeW.x;
        float halfHeight = 0.5f*gin[0].SizeW.y;

        float4 v[4];
        v[0] = float4(gin[0].CenterW + halfWidth*right - halfHeight*up, 1.0f);
        v[1] = float4(gin[0].CenterW + halfWidth*right + halfHeight*up, 1.0f);
        v[2] = float4(gin[0].CenterW - halfWidth*right - halfHeight*up, 1.0f);
        v[3] = float4(gin[0].CenterW - halfWidth*right + halfHeight*up, 1.0f);

        //
        // Transform quad vertices to homogeneous clip space and output
        // them as a triangle strip.
        //
        GeoOut gout;
        [unroll]
        for(int i = 0; i < 4; ++i)
        {
            gout.PosH    = mul(v[i], gViewProj);
            gout.PosW    = v[i].xyz;
            gout.NormalW = look;
            gout.Tex     = gTexC[i];
            gout.PrimID  = primID;

            triStream.Append(gout);
        }
    }
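To check the corner math outside the shader, here is a CPU-side sketch of the same expansion using System.Numerics rather than HLSL or SlimDX types; the BillboardMath name is hypothetical. It returns the corners in the same order as v[0] to v[3], so they line up with the gTexC entries.

```csharp
using System;
using System.Numerics;

// CPU-side sketch of the geometry shader math: expand a y-axis-aligned billboard
// around `center` so that it faces `eye`. Corner order matches v[0]..v[3].
public static class BillboardMath
{
    public static Vector3[] ExpandQuad(Vector3 center, Vector2 size, Vector3 eye)
    {
        var up = Vector3.UnitY;

        // Direction to the eye, projected onto the xz-plane so the quad stays upright.
        var look = eye - center;
        look.Y = 0.0f;
        look = Vector3.Normalize(look);

        var right = Vector3.Cross(up, look);

        float halfWidth = 0.5f * size.X;
        float halfHeight = 0.5f * size.Y;

        return new[]
        {
            center + halfWidth * right - halfHeight * up, // v[0], tex (0,1)
            center + halfWidth * right + halfHeight * up, // v[1], tex (0,0)
            center - halfWidth * right - halfHeight * up, // v[2], tex (1,1)
            center - halfWidth * right + halfHeight * up, // v[3], tex (1,0)
        };
    }
}
```

For a 2 x 4 billboard at the origin seen from (0, 0, 10), right is (1, 0, 0) and the corners are (1, -2, 0), (1, 2, 0), (-1, -2, 0) and (-1, 2, 0); raising the eye does not tilt the quad, because look.Y is zeroed. If the eye were directly above the point, look would be zero and normalizing it is undefined (NaN here), a degenerate case the shader shares.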

The pixel shader for our billboards is very similar to the one in our BasicEffect. The only difference is that we use the primitive ID passed along by the geometry shader to select, from gTreeMapArray, the texture to render on the billboard. Sampling a texture array takes a 3D texture coordinate: the first two components are the usual u and v, while the third is the index of the texture in the array. We may draw more billboards in a single draw call than there are textures in the array, so we take the primitive ID modulo the number of textures (hard-coded as 4 in the shader). Note that we also use alpha-clipping to “cut out” the pixels of the billboard that are transparent in the texture.

    float4 PS(GeoOut pin, uniform int gLightCount, uniform bool gUseTexure,
              uniform bool gAlphaClip, uniform bool gFogEnabled) : SV_Target
    {
        // snip...
        if(gUseTexure)
        {
            // Sample texture.
            float3 uvw = float3(pin.Tex, pin.PrimID%4);
            texColor = gTreeMapArray.Sample( samLinear, uvw );

            if(gAlphaClip)
            {
                // Discard pixel if texture alpha < 0.05.  Note that we do this
                // test as soon as possible so that we can potentially exit the shader
                // early, thereby skipping the rest of the shader code.
                clip(texColor.a - 0.05f);
            }
        }
        // snip...
    }
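The two per-pixel decisions above, which array slice to sample and whether clip() keeps the pixel, can be mirrored in a few lines of C#. This is a sketch with a hypothetical TreePixelLogic class; the slice count is a parameter here, whereas the shader hard-codes 4.

```csharp
using System;

// CPU-side mirror of the two decisions the tree pixel shader makes.
public static class TreePixelLogic
{
    // Third texture coordinate: which slice of gTreeMapArray a primitive samples.
    public static int ArraySlice(uint primId, int sliceCount) =>
        (int)(primId % (uint)sliceCount);

    // clip(x) discards the pixel when x < 0, so a texel survives when alpha >= 0.05.
    public static bool SurvivesAlphaClip(float alpha) => alpha - 0.05f >= 0.0f;
}
```

Primitive IDs 0, 1, 2, 3, 4, 5 map to slices 0, 1, 2, 3, 0, 1. If the array held a different number of textures, the literal 4 in the shader would no longer match, and some slices would go unused or be repeated.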

TreeSpriteEffect Wrapper Class

We will need to create a C# wrapper class for our new billboard effect. This is largely the same process that we used to create our BasicEffect class, so I will not go into great detail on it.

One wrinkle, however, is that we need to write a function to create the texture array that we bind to the gTreeMapArray shader variable. Neither DirectX nor SlimDX provides an out-of-the-box way to create a texture array from a set of image files, so we will need to do that ourselves. We’ll add a function for this, CreateTexture2DArraySRV, to our Util class; it loads an array of textures, creates the texture array, and creates a ShaderResourceView over it that we can bind to our shader.

    public static ShaderResourceView CreateTexture2DArraySRV(
        Device device, DeviceContext immediateContext, string[] filenames, Format format,
        FilterFlags filter = FilterFlags.None, FilterFlags mipFilter = FilterFlags.Linear)
    {
        // Load each source texture into a CPU-readable staging texture.
        var srcTex = new Texture2D[filenames.Length];
        for (int i = 0; i < filenames.Length; i++)
        {
            var loadInfo = new ImageLoadInformation
            {
                FirstMipLevel = 0,
                Usage = ResourceUsage.Staging,
                BindFlags = BindFlags.None,
                CpuAccessFlags = CpuAccessFlags.Write | CpuAccessFlags.Read,
                OptionFlags = ResourceOptionFlags.None,
                Format = format,
                FilterFlags = filter,
                MipFilterFlags = mipFilter,
            };
            srcTex[i] = Texture2D.FromFile(device, filenames[i], loadInfo);
        }

        // Describe the array from the first texture; all sources are assumed to match it.
        var texElementDesc = srcTex[0].Description;
        var texArrayDesc = new Texture2DDescription
        {
            Width = texElementDesc.Width,
            Height = texElementDesc.Height,
            MipLevels = texElementDesc.MipLevels,
            ArraySize = srcTex.Length,
            Format = texElementDesc.Format,
            SampleDescription = new SampleDescription(1, 0),
            Usage = ResourceUsage.Default,
            BindFlags = BindFlags.ShaderResource,
            CpuAccessFlags = CpuAccessFlags.None,
            OptionFlags = ResourceOptionFlags.None
        };
        var texArray = new Texture2D(device, texArrayDesc);

        // Copy every mip level of every source texture into its subresource of the array.
        for (int texElement = 0; texElement < srcTex.Length; texElement++)
        {
            for (int mipLevel = 0; mipLevel < texElementDesc.MipLevels; mipLevel++)
            {
                var mappedTex2D = immediateContext.MapSubresource(
                    srcTex[texElement], mipLevel, 0, MapMode.Read, MapFlags.None);

                immediateContext.UpdateSubresource(
                    mappedTex2D,
                    texArray,
                    Resource.CalculateSubresourceIndex(mipLevel, texElement, texElementDesc.MipLevels));

                immediateContext.UnmapSubresource(srcTex[texElement], mipLevel);
            }
        }

        // Create a view that exposes every slice and mip level of the array to shaders.
        var viewDesc = new ShaderResourceViewDescription
        {
            Format = texArrayDesc.Format,
            Dimension = ShaderResourceViewDimension.Texture2DArray,
            MostDetailedMip = 0,
            MipLevels = texArrayDesc.MipLevels,
            FirstArraySlice = 0,
            ArraySize = srcTex.Length
        };
        var texArraySRV = new ShaderResourceView(device, texArray, viewDesc);

        // The view holds its own reference to the array, so release ours and the staging textures.
        ReleaseCom(ref texArray);
        for (int i = 0; i < srcTex.Length; i++)
        {
            ReleaseCom(ref srcTex[i]);
        }
        return texArraySRV;
    }

First, we load the textures named in the filenames array into a temporary array, using the provided format, min/mag filter and mip filter. We use ResourceUsage.Staging with CpuAccessFlags Read & Write, because we are going to read these textures from the CPU. Next, we grab the Texture2DDescription of the first texture and use it as the basis for the Texture2DDescription of our texture array. Note that we are assuming that the source textures all have the same dimensions, format and number of mip levels; you will get incorrect results if they differ. We set ArraySize to the number of source textures, to indicate that this is a texture array, and then create the texture array from the description.
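The assumption that every source texture matches the first one could be checked before building the array. Below is a minimal sketch, assuming a hypothetical TextureInfo class that stands in for the Texture2DDescription fields we care about (the format is just a string here so the example runs without SlimDX).

```csharp
using System;

// Hypothetical stand-in for the relevant fields of a Texture2DDescription.
public sealed class TextureInfo
{
    public int Width { get; }
    public int Height { get; }
    public int MipLevels { get; }
    public string Format { get; }

    public TextureInfo(int width, int height, int mipLevels, string format)
    {
        Width = width; Height = height; MipLevels = mipLevels; Format = format;
    }
}

public static class TextureArrayChecks
{
    // Every slice of a Texture2DArray shares one description, so reject any
    // source texture that differs from the first in size, mip count or format.
    public static void EnsureUniform(TextureInfo[] sources)
    {
        if (sources.Length == 0)
            throw new ArgumentException("At least one texture is required.", nameof(sources));

        var first = sources[0];
        for (int i = 1; i < sources.Length; i++)
        {
            var s = sources[i];
            if (s.Width != first.Width || s.Height != first.Height ||
                s.MipLevels != first.MipLevels || s.Format != first.Format)
            {
                throw new ArgumentException($"Texture {i} does not match texture 0.", nameof(sources));
            }
        }
    }
}
```

Failing early with a clear message is easier to diagnose than a texture array that silently renders garbage.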

Once we have created the texture array, we need to copy the source textures into it. We do this using the DeviceContext.MapSubresource() method to read each source texture, and the DeviceContext.UpdateSubresource() method to copy the texture data into the array. We need to copy each source texture along with its full mipmap chain, because each element of our texture array has its own mipmap chain. Logically, we can visualize this as a 2D matrix, with the top row holding the full-size texture elements and each column holding the mipmap chain of one texture element; however, we cannot assume that this logical layout matches how the elements are actually allocated in memory. To update the texture array properly, we need to match the mipmap level of the source texture we are copying with the corresponding mipmap level of the right element in the array, that is, with the correct subresource index. The Resource.CalculateSubresourceIndex() method computes this index for us.
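The subresource index follows the formula documented for D3D11CalcSubresource: the mip levels of one array slice are numbered consecutively, then the next slice begins. The sketch below, with a hypothetical Subresources class, reproduces that formula and also counts the levels in a full mip chain, which bounds the inner copy loop.

```csharp
using System;

public static class Subresources
{
    // index = mipSlice + arraySlice * mipLevels (the D3D11CalcSubresource formula).
    public static int CalculateIndex(int mipSlice, int arraySlice, int mipLevels) =>
        mipSlice + arraySlice * mipLevels;

    // Number of levels in a full mip chain for a width x height texture:
    // keep halving the larger dimension until it reaches 1.
    public static int FullMipChainLength(int width, int height)
    {
        int levels = 1;
        int size = Math.Max(width, height);
        while (size > 1)
        {
            size /= 2;
            levels++;
        }
        return levels;
    }
}
```

For four 512x512 tree textures with full mip chains, each slice has 10 levels, so mip level 2 of the second texture (array slice 1) lives at subresource 2 + 1 * 10 = 12.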

Finally, once we have copied all the source textures and their mipmap chains into our texture array, we create a ShaderResourceView for the texture array, which we return. We also release the staging source textures, as well as our reference to the texture array, so that we do not leak memory; the view holds its own reference to the array, so the array stays alive as long as the view does.

Implementing the Demo

Starting from our previously implemented BlendDemo, there are very few changes to make. In our Init() function, we create the tree texture array using the Util.CreateTexture2DArraySRV function just discussed. Then we create the vertex buffer for our tree billboards in our BuildTreeSpritesBuffers() function. This is very simple, as this geometry consists only of a vertex buffer of points. We position the billboards so that they sit properly on our land mesh, using the GetHillHeight function for the Y-coordinate of each point sprite, plus an offset to account for the height of the billboard. I have added a tweak to this code to ensure that the tree billboards are only placed at points above the level of our water mesh and below the upper slopes of our hilltops.

    private void BuildTreeSpritesBuffers()
    {
        var v = new TreePointSprite[TreeCount];
        for (int i = 0; i < TreeCount; i++)
        {
            float x, y, z;
            // Keep picking random positions until the terrain height is above the
            // water and below the upper slopes of the hills.
            do
            {
                x = MathF.Rand(-75.0f, 75.0f);
                z = MathF.Rand(-75.0f, 75.0f);
                y = GetHillHeight(x, z);
            } while (y < -3 || y > 15.0f);

            // Offset the center upward to account for the height of the billboard.
            y += 10.0f;

            v[i].Pos = new Vector3(x, y, z);
            v[i].Size = new Vector2(24.0f, 24.0f);
        }

        var vbd = new BufferDescription
        {
            Usage = ResourceUsage.Immutable,
            SizeInBytes = TreePointSprite.Stride * TreeCount,
            BindFlags = BindFlags.VertexBuffer,
            CpuAccessFlags = CpuAccessFlags.None,
            OptionFlags = ResourceOptionFlags.None,
        };
        // The immutable buffer copies the data at creation, so the stream can be disposed afterwards.
        using (var data = new DataStream(v, false, false))
        {
            _treeSpritesVB = new Buffer(Device, data, vbd);
        }
    }
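The placement loop is a form of rejection sampling: draw random positions and keep only those whose terrain height falls in the allowed band. Here is a self-contained sketch using System.Random; the hill height function is an assumption that mirrors the one used in the earlier land-and-waves demos, and the TreePlacement name and the maxAttempts cap are hypothetical additions so that an impossible height band cannot loop forever.

```csharp
using System;

public static class TreePlacement
{
    // Assumed terrain function, mirroring the hill height used in the earlier demos.
    public static float GetHillHeight(float x, float z) =>
        0.3f * (z * (float)Math.Sin(0.1f * x) + x * (float)Math.Cos(0.1f * z));

    // Rejection sampling: draw random (x, z) in [-75, 75) until the terrain height
    // lies in [minY, maxY], i.e. above the water and below the hilltops.
    public static (float X, float Y, float Z) PickSite(Random rng, float minY, float maxY, int maxAttempts)
    {
        for (int attempt = 0; attempt < maxAttempts; attempt++)
        {
            float x = (float)(rng.NextDouble() * 150.0 - 75.0);
            float z = (float)(rng.NextDouble() * 150.0 - 75.0);
            float y = GetHillHeight(x, z);
            if (y >= minY && y <= maxY)
                return (x, y, z);
        }
        throw new InvalidOperationException("No site found within the height range.");
    }
}
```

Calling PickSite(rng, -3.0f, 15.0f, 10000) reproduces the demo’s band; the demo then adds its 10-unit offset to the returned Y before storing the point.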

Since our tree billboards do not use alpha-blending (only alpha-clipping), we can draw them before our land mesh in our DrawScene() function. I have split this code out into a helper function, DrawTreeSprites(). Drawing the tree billboards is relatively straightforward: we set our shader variables, set the PrimitiveTopology (be sure to use PrimitiveTopology.PointList) and the InputLayout, select the appropriate technique from our effect based on the user’s display choice, then bind our point sprite vertex buffer and draw the billboards. The only wrinkle is that we have the option of setting a BlendState that enables an effect known as Alpha-To-Coverage.

    private void DrawTreeSprites(Matrix viewProj)
    {
        Effects.TreeSpriteFX.SetDirLights(_dirLights);
        Effects.TreeSpriteFX.SetEyePosW(_eyePosW);
        Effects.TreeSpriteFX.SetFogColor(Color.Silver);
        Effects.TreeSpriteFX.SetFogStart(15.0f);
        Effects.TreeSpriteFX.SetFogRange(175.0f);
        Effects.TreeSpriteFX.SetViewProj(viewProj);
        Effects.TreeSpriteFX.SetMaterial(_treeMat);
        Effects.TreeSpriteFX.SetTreeTextrueMapArray(_treeTextureMapArraySRV);

        ImmediateContext.InputAssembler.PrimitiveTopology = PrimitiveTopology.PointList;
        ImmediateContext.InputAssembler.InputLayout = InputLayouts.TreePointSprite;

        EffectTechnique treeTech;
        switch (_renderOptions)
        {
            case RenderOptions.Lighting:
                treeTech = Effects.TreeSpriteFX.Light3Tech;
                break;
            case RenderOptions.Textures:
                treeTech = Effects.TreeSpriteFX.Light3TexAlphaClipTech;
                break;
            case RenderOptions.TexturesAndFog:
                treeTech = Effects.TreeSpriteFX.Light3TexAlphaClipFogTech;
                break;
            default:
                throw new ArgumentOutOfRangeException();
        }

        for (int p = 0; p < treeTech.Description.PassCount; p++)
        {
            ImmediateContext.InputAssembler.SetVertexBuffers(
                0, new VertexBufferBinding(_treeSpritesVB, TreePointSprite.Stride, 0));

            var blendFactor = new Color4(0, 0, 0, 0);
            if (_alphaToCoverageEnabled)
            {
                ImmediateContext.OutputMerger.BlendState = RenderStates.AlphaToCoverageBS;
                ImmediateContext.OutputMerger.BlendFactor = blendFactor;
                ImmediateContext.OutputMerger.BlendSampleMask = -1;
            }
            treeTech.GetPassByIndex(p).Apply(ImmediateContext);
            ImmediateContext.Draw(TreeCount, 0);

            // Restore the default blend state for subsequent draws.
            ImmediateContext.OutputMerger.BlendState = null;
            ImmediateContext.OutputMerger.BlendFactor = blendFactor;
            ImmediateContext.OutputMerger.BlendSampleMask = -1;
        }
    }

Alpha-To-Coverage

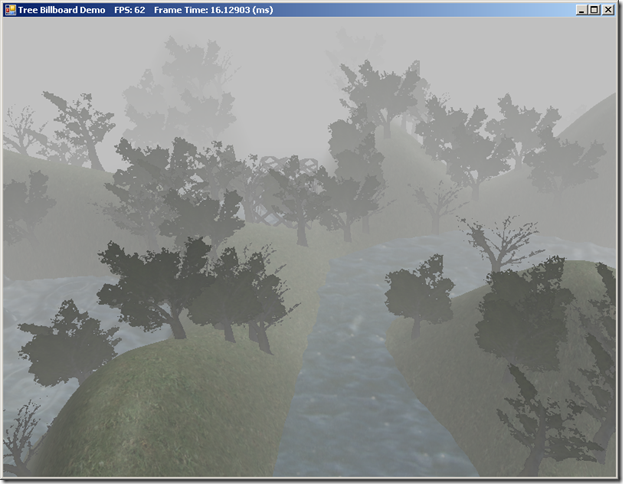

If we render the tree billboards without Alpha-to-Coverage enabled, you will notice that the farther-away billboards will be rendered with a blocky black outline.

This is because we are using alpha-clipping together with linear mipmap filtering. For the closest tree billboards, we sample the full-size mipmap of the texture, but for the farther-away billboards, we sample one of the smaller mipmaps. When the smaller mip levels are produced, neighboring texels are averaged together, so the crisp edges of the alpha channel in the full-size texture get blurred: a texel that is fully transparent in the full-size mipmap can become partially transparent in a smaller one if it is adjacent to opaque texels, and then it passes the clip() test in the pixel shader. Because the background of our tree textures is black, these edge texels are darkened, which produces the black outline in the screenshot above.
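The fringe can be reproduced with one step of mip reduction on a single row of alpha values. This sketch assumes a plain 2:1 box filter and a hypothetical MipFringe class; the filter actually used depends on how the textures are loaded and their mips generated, but any averaging filter shows the same effect.

```csharp
using System;

public static class MipFringe
{
    // One step of a 2:1 box filter on a row of alpha values (assumed filter).
    public static float[] Downsample(float[] alpha)
    {
        var result = new float[alpha.Length / 2];
        for (int i = 0; i < result.Length; i++)
            result[i] = 0.5f * (alpha[2 * i] + alpha[2 * i + 1]);
        return result;
    }
}
```

Downsampling the row 0, 0, 0, 1, 1, 1, 1, 1 gives 0, 0.5, 1, 1. The second texel straddles the edge of the leaves: its alpha of 0.5 easily passes clip(texColor.a - 0.05f), while its color channels are averaged the same way, half leaf color and half black background, which is the dark outline.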

We could resolve this by rendering with alpha-blending enabled. However, that would require drawing the billboards in back-to-front order for proper blending, which adds a lot of sorting overhead if we have a large number of billboards. It also causes a great deal of overdraw: since the farther billboards are drawn first, nearer ones never get a chance to occlude them, so the depth test cannot reject hidden pixels before they are shaded.
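To make the cost concrete, this is roughly the extra per-frame work that alpha-blended billboards would need. It is a sketch with a hypothetical BlendOrder class, using System.Numerics; the sorted order would then also have to be written back to the GPU (for example through a dynamic vertex or index buffer), which the immutable point buffer used in this demo avoids.

```csharp
using System;
using System.Numerics;

public static class BlendOrder
{
    // Returns billboard centers sorted far-to-near from the eye, the order alpha
    // blending needs. This must be redone whenever the camera moves.
    public static Vector3[] SortBackToFront(Vector3[] centers, Vector3 eye)
    {
        var sorted = (Vector3[])centers.Clone();
        // Compare squared distances (no square root needed), largest first.
        Array.Sort(sorted, (a, b) =>
            Vector3.DistanceSquared(b, eye).CompareTo(Vector3.DistanceSquared(a, eye)));
        return sorted;
    }
}
```

Even at O(n log n) per frame this is manageable for a few hundred trees, but it grows with every billboard added and does nothing about the overdraw.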

We can use MSAA to alleviate this problem with the alpha-clipping method. With MSAA, the pixel shader still runs once per pixel, but the depth/stencil test and the coverage value (whether a subpixel sample lies inside or outside the polygon) are evaluated for each sample, and the final pixel color is resolved from the samples. For solid geometry this works fine; for our billboards, however, coverage is determined by the edges of the entire quad, not just the portion that we display through the alpha channel. Fortunately, DirectX can instruct the GPU to derive the coverage of a pixel’s samples from the alpha value output by the pixel shader. This is known as Alpha-to-Coverage, and it is enabled as part of a BlendState. We’ll add a new blend state to our RenderStates class to handle Alpha-To-Coverage:

    var atcDesc = new BlendStateDescription
    {
        AlphaToCoverageEnable = true,
        IndependentBlendEnable = false,
    };
    atcDesc.RenderTargets[0].BlendEnable = false;
    atcDesc.RenderTargets[0].RenderTargetWriteMask = ColorWriteMaskFlags.All;

    AlphaToCoverageBS = BlendState.FromDescription(device, atcDesc);
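Conceptually, alpha-to-coverage turns the shader’s output alpha into a mask of which MSAA samples the pixel covers. The exact mapping is up to the hardware (often dithered), so the sketch below, with a hypothetical AlphaToCoverageModel class, is only a simplified model in which the fraction of covered samples tracks alpha.

```csharp
using System;

public static class AlphaToCoverageModel
{
    // Simplified model: cover round(alpha * sampleCount) samples.
    // Real hardware uses its own sample patterns; this only shows the idea.
    public static uint CoverageMask(float alpha, int sampleCount)
    {
        float a = Math.Min(Math.Max(alpha, 0.0f), 1.0f);
        int covered = (int)Math.Round(a * sampleCount, MidpointRounding.AwayFromZero);
        // Turn on the lowest `covered` bits, one bit per MSAA sample.
        return covered >= 32 ? uint.MaxValue : (1u << covered) - 1u;
    }
}
```

With 4x MSAA, a fringe texel with alpha 0.5 covers about half of the pixel’s samples, so the resolved color lands roughly halfway between the tree and whatever is behind it, instead of an opaque dark edge.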

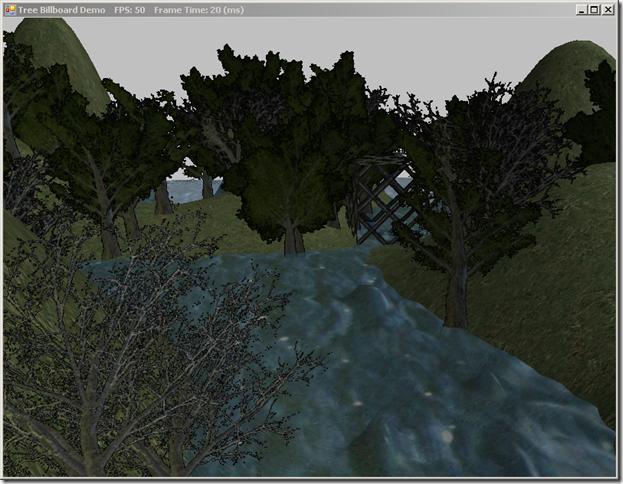

Using Alpha-to-Coverage, we get a much smoother effect at the edges of our tree billboards:

Next Time…

I’m going to skip over a few chapters of the book, covering the Compute Shader and the Tessellation stages, as the examples in those chapters aren’t particularly relevant to my purposes (eventually programming an RTS/TBS game); I may come back to them later. Instead, I will skip ahead to the third section of the book, Chapter 14, and implement the FPS camera demo.